Uncertainty in Deep Learning

Semester

Winter term

Language

English

Contents

Modern deep learning models achieve excellent performance across a wide range of tasks. However, they are also known to be overconfident when presented with novel data not encountered during training. This overconfidence is particularly problematic in safety-critical applications such as healthcare, autonomous driving, and scientific discovery, where understanding how confident or uncertain a model is in its predictions is essential.

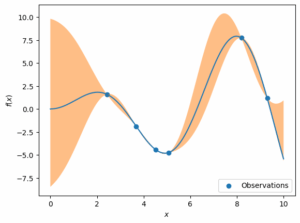

This course aims to equip students with both the theoretical foundations and the practical tools needed to quantify and reason about uncertainty in deep learning. The course begins with an introduction to the two primary types of uncertainty, epistemic (model uncertainty) and aleatoric (data uncertainty), and explains why uncertainty quantification is critical in modern AI systems. Students are also introduced to model calibration, which aligns predicted probabilities with observed outcome frequencies and is a crucial first step toward building trustworthy and reliable models. The course then covers a range of methods for uncertainty estimation, including Bayesian Neural Networks, Monte Carlo Dropout, Deep Ensembles, and Conformal Prediction. The final part of the course focuses on real-world applications, demonstrating how uncertainty estimates can improve model robustness and decision-making in domains such as active learning, scientific machine learning, and large language models.
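Calibration in the sense described above (predicted probabilities matching observed frequencies) is often measured with the expected calibration error (ECE): predictions are grouped into confidence bins, and the gap between each bin's accuracy and its mean confidence is averaged, weighted by the bin's share of the data. The following Python sketch is illustrative only and is not taken from the course material; it assumes equal-width bins over [0, 1], and the function name `expected_calibration_error` and its `n_bins` parameter are hypothetical.

```python
from typing import List, Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    predictions: Sequence[int],
    labels: Sequence[int],
    n_bins: int = 10,
) -> float:
    """Binned ECE: size-weighted mean of |accuracy - mean confidence| per bin.

    Hypothetical helper for illustration; uses equal-width confidence bins.
    """
    if not (len(confidences) == len(predictions) == len(labels)):
        raise ValueError("inputs must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Per bin: [sum of confidences, number of correct predictions, count].
    bins: List[list] = [[0.0, 0, 0] for _ in range(n_bins)]
    for conf, pred, label in zip(confidences, predictions, labels):
        # Map a confidence in [0, 1] to a bin index; clamp so 1.0 lands in the last bin.
        idx = min(max(int(conf * n_bins), 0), n_bins - 1)
        bins[idx][0] += conf
        bins[idx][1] += int(pred == label)
        bins[idx][2] += 1

    ece = 0.0
    for conf_sum, correct, count in bins:
        if count == 0:
            continue  # empty bins contribute nothing
        avg_conf = conf_sum / count
        accuracy = correct / count
        ece += (count / n) * abs(accuracy - avg_conf)
    return ece


# Toy example: four predictions made with 90% confidence, only three of them correct.
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 1], [1, 1, 1, 0])
```

In the toy example all predictions fall into the same bin, where the accuracy is 0.75 but the mean confidence is 0.9, so the ECE is about 0.15: the model is overconfident. A perfectly calibrated model would have an ECE of 0.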

The course contains the following chapters and topics:

- Introduction and Foundations
  - Introduction to and motivation for uncertainty quantification; sources of uncertainty in deep learning models
  - Foundational concepts in Probability and Statistics
  - Review of Neural Network concepts for classification and regression

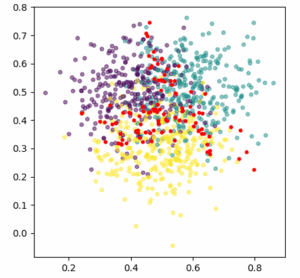

- Neural Network Calibration
  - Calibration and overconfidence in neural networks
  - Factors influencing calibration in modern neural networks
  - Simple post-hoc techniques to calibrate models
  - Practical considerations for choosing calibration methods

- Bayesian Deep Learning and Model Validation
  - Introduction to the Bayesian Framework
  - Variational Inference
  - Bayes by Backprop
  - Deep ensembles and Monte Carlo Dropout
  - Evaluation Methods for Uncertainty
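One widely used simple post-hoc calibration technique is temperature scaling: a trained classifier's logits are divided by a single scalar temperature T > 0, fitted on held-out validation data. The Python sketch below is an illustration under stated assumptions, not necessarily the variant taught in the course: it fits T by a plain grid search over the validation negative log-likelihood rather than the gradient-based optimization usually used, and the helper names (`log_softmax`, `mean_nll`, `fit_temperature`) and the default grid are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Log-probabilities of softmax(logits / temperature), computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_norm for z in scaled]


def mean_nll(
    logits_batch: Sequence[Sequence[float]],
    labels: Sequence[int],
    temperature: float,
) -> float:
    """Mean negative log-likelihood of the true labels at a given temperature."""
    total = 0.0
    for logits, y in zip(logits_batch, labels):
        total -= log_softmax(logits, temperature)[y]
    return total / len(labels)


def fit_temperature(
    logits_batch: Sequence[Sequence[float]],
    labels: Sequence[int],
    candidates: Optional[Sequence[float]] = None,
) -> float:
    """Return the candidate temperature with the lowest validation NLL (grid search)."""
    if candidates is None:
        # Hypothetical default grid: 200 log-spaced temperatures between 0.05 and 20.
        lo, hi, n = math.log(0.05), math.log(20.0), 200
        candidates = [math.exp(lo + i * (hi - lo) / (n - 1)) for i in range(n)]
    return min(candidates, key=lambda t: mean_nll(logits_batch, labels, t))


# Overconfident toy validation set: a logit gap of 10 everywhere, but only 3 of 4 correct.
val_logits = [[10.0, 0.0]] * 4
val_labels = [0, 0, 0, 1]
T = fit_temperature(val_logits, val_labels)
calibrated_conf = math.exp(max(log_softmax(val_logits[0], T)))
```

For this toy set the NLL-optimal temperature is 10 / ln 3 ≈ 9.1, which lowers the confidence from about 0.99995 to roughly 0.75, matching the 75% accuracy. Because dividing all logits by the same positive T preserves their order, temperature scaling never changes the predicted class, only how confident the model claims to be.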

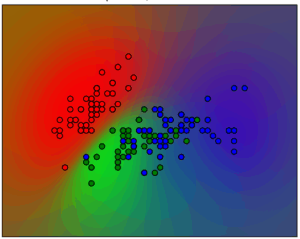

- Applications of Uncertainty in Active Learning
  - Introduction to Active Learning
  - Pool-based active learning
  - Uncertainty sampling strategies: entropy, BALD, variation ratios, etc.
  - Query by committee and ensemble disagreement

- Advanced Topics
  - Conformal Prediction for Distribution-Free Uncertainty
  - Uncertainty in scientific machine learning: Physics-Informed Neural Networks (PINNs), Bayesian PINNs
  - Uncertainty in LLMs and dealing with hallucinations
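The uncertainty sampling strategies listed above are typically computed from several stochastic predictions per unlabeled pool point, for example from Monte Carlo Dropout forward passes or from the members of a deep ensemble. The Python sketch below assumes such samples are already available as lists of class-probability vectors; the function names and the toy pool are hypothetical, and natural logarithms are used. It implements the standard definitions of predictive entropy (entropy of the mean prediction), BALD (the mutual information between the prediction and the model parameters, estimated as the entropy of the mean prediction minus the mean entropy of the individual samples), and variation ratios (one minus the fraction of samples that vote for the modal class).

```python
import math
from collections import Counter
from typing import List, Sequence

# Each pool point has T sampled probability vectors, e.g. from MC Dropout passes.
Samples = Sequence[Sequence[float]]


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def mean_probs(samples: Samples) -> List[float]:
    """Average the sampled probability vectors class by class."""
    n = len(samples)
    return [sum(s[c] for s in samples) / n for c in range(len(samples[0]))]


def predictive_entropy(samples: Samples) -> float:
    """Total uncertainty: entropy of the mean prediction."""
    return entropy(mean_probs(samples))


def bald(samples: Samples) -> float:
    """Mutual information: entropy of the mean minus the mean of the entropies."""
    expected_entropy = sum(entropy(s) for s in samples) / len(samples)
    return predictive_entropy(samples) - expected_entropy


def variation_ratio(samples: Samples) -> float:
    """One minus the fraction of samples whose argmax is the modal class."""
    votes = Counter(max(range(len(s)), key=s.__getitem__) for s in samples)
    return 1.0 - votes.most_common(1)[0][1] / len(samples)


# Hypothetical pool of three unlabeled points, three MC samples each (binary task).
pool = {
    "confident": [[0.99, 0.01], [0.98, 0.02], [0.99, 0.01]],
    "ambiguous": [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]],
    "disagreement": [[0.95, 0.05], [0.05, 0.95], [0.9, 0.1]],
}
query_by_entropy = max(pool, key=lambda name: predictive_entropy(pool[name]))
query_by_bald = max(pool, key=lambda name: bald(pool[name]))
```

In this toy pool, ranking by predictive entropy selects the `ambiguous` point, whose samples all agree on a 50/50 split (aleatoric uncertainty), while BALD selects the `disagreement` point, whose samples contradict each other (epistemic uncertainty). This illustrates how BALD targets the uncertainty that more labeled data could actually reduce.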

Prior knowledge of deep learning is highly recommended.

Module Data

Compulsory elective module “Maschinelles Lernen” (Machine Learning), 3 LP (credit points), for the M.Sc. Informatik (Computer Science)